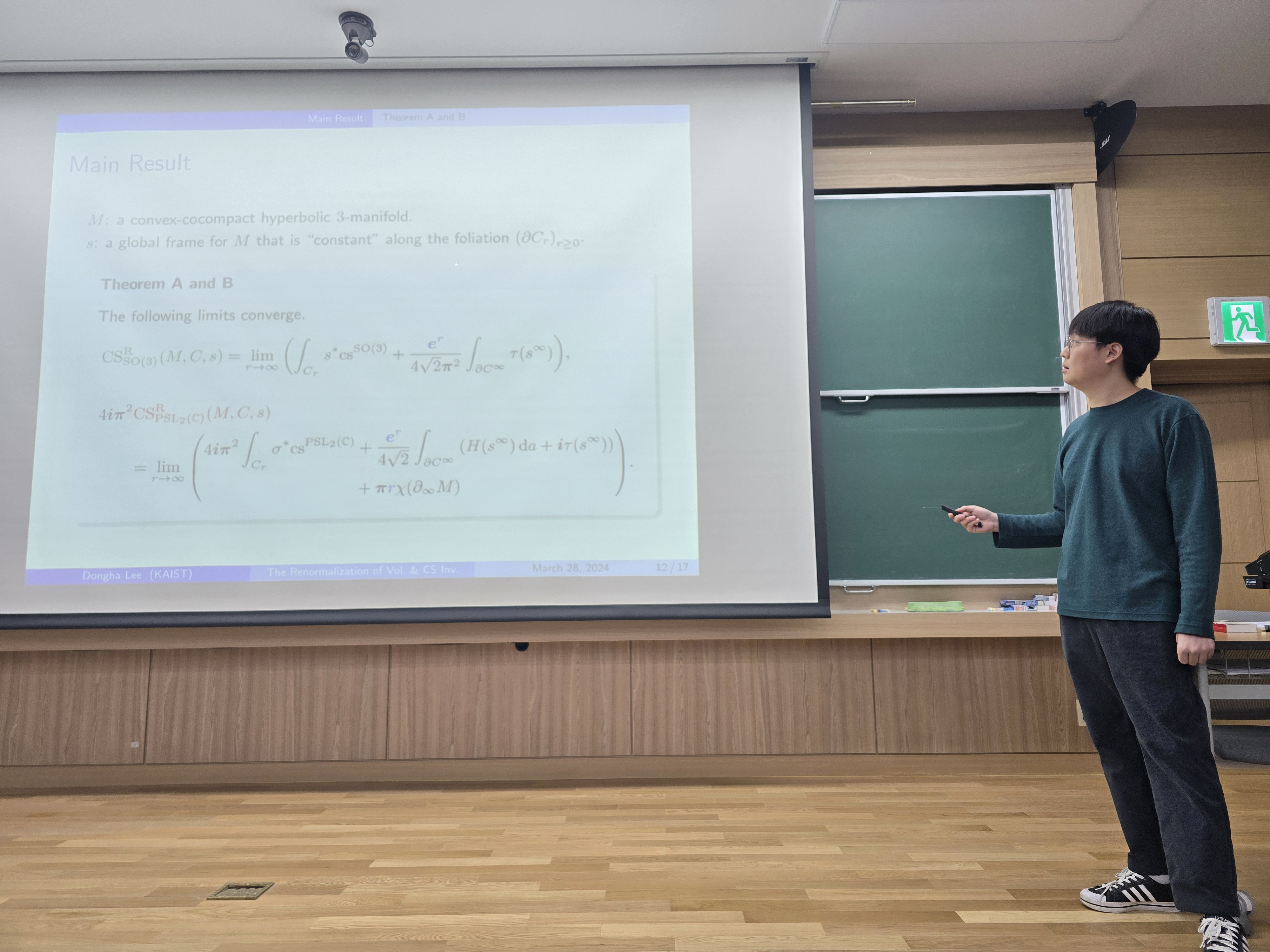

We uploaded photos from the 30th KMGS on March 28, 2024.

Thanks, Dongha Lee and all the participants!

Department of Mathematical Sciences, KAIST

The 30th KMGS will be held on Thursday, March 28, in Room 1501 of the Natural Science Building (E6-1).

Our speaker is Dongha Lee from the Dept. of Mathematical Sciences, KAIST.

The abstract of the talk is as follows.

Slot (11:50 AM to 12:30 PM)

[Speaker] 이동하 (Dongha Lee) from the Dept. of Mathematical Sciences, KAIST, supervised by Prof. Jinsung Park (박진성, KIAS) and Prof. Hyungryul Baik (백형렬)

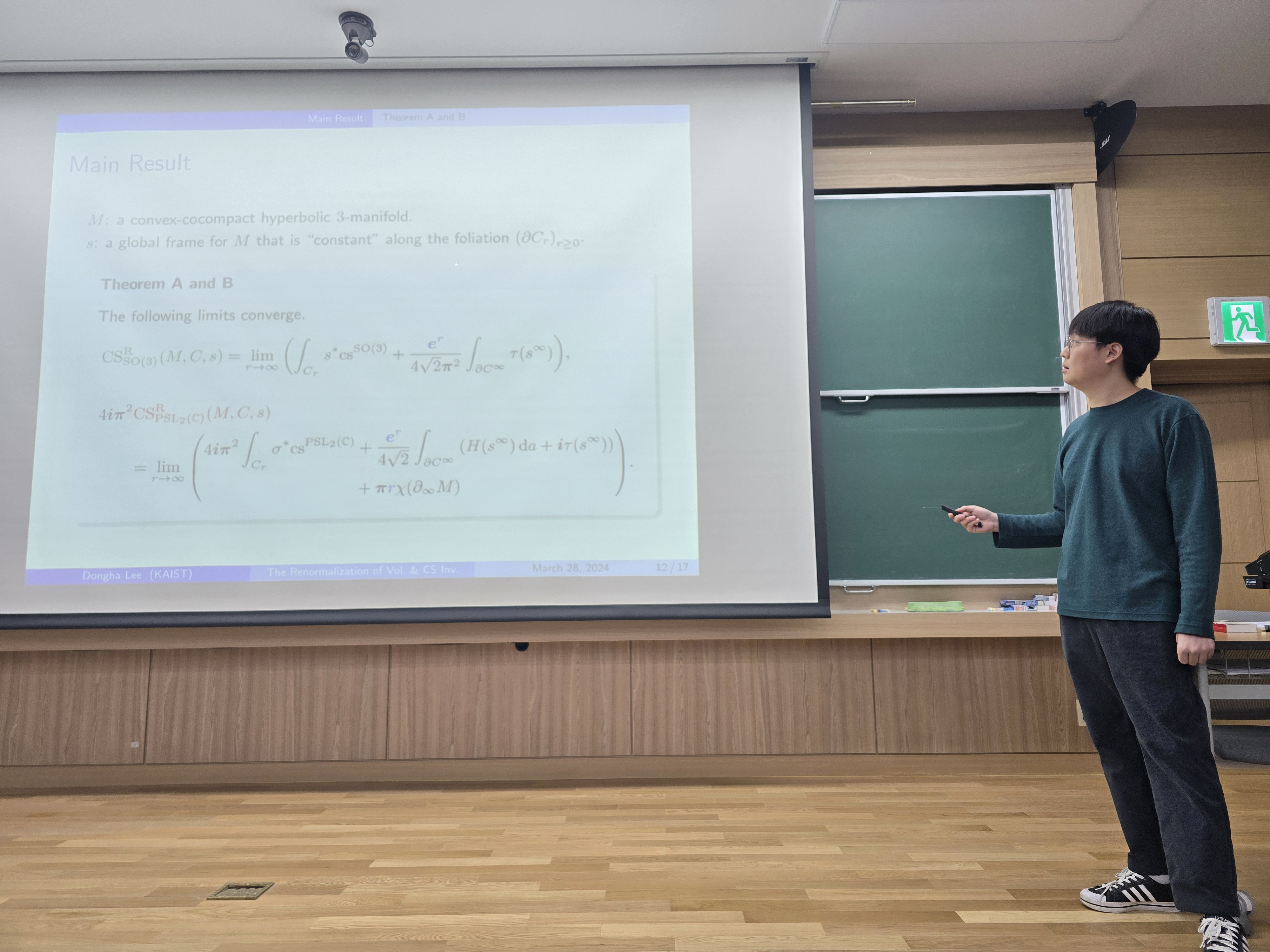

[Title] The Renormalization of Volume and Chern-Simons Invariant for Hyperbolic 3-Manifolds

[Discipline] Differential Geometry

[Abstract]

For hyperbolic manifolds, many interesting results point to a deep relationship between hyperbolic volume and the Chern-Simons invariant. In this talk, we consider noncompact hyperbolic 3-manifolds with infinite volume. For these manifolds, there is a well-defined invariant called the renormalized volume, which plays the role of the classical volume. The talk will begin with a gentle introduction to hyperbolic geometry and build up to the renormalization of the Chern-Simons invariant, which is closely related to the renormalized hyperbolic volume.

[Language] English

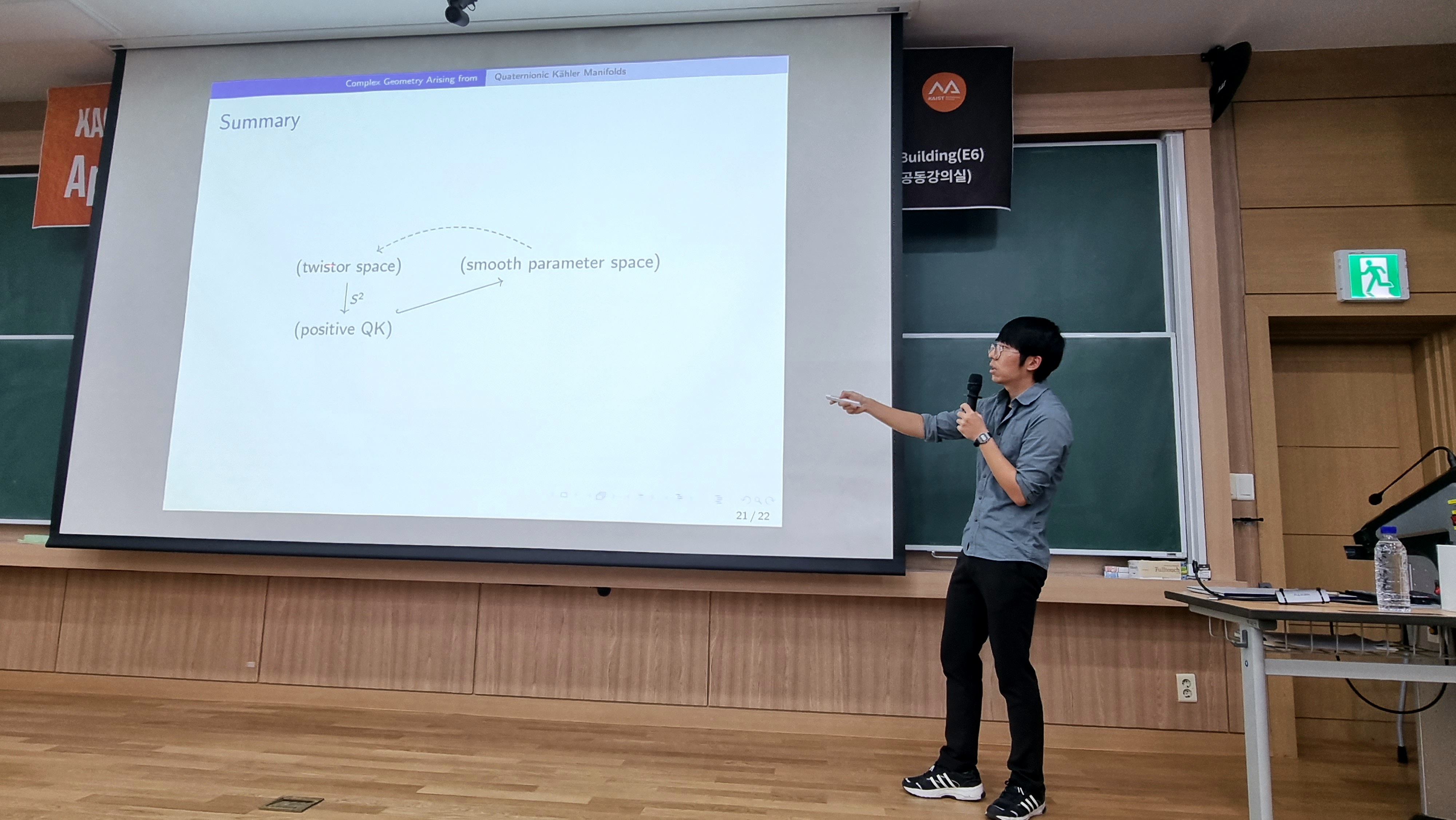

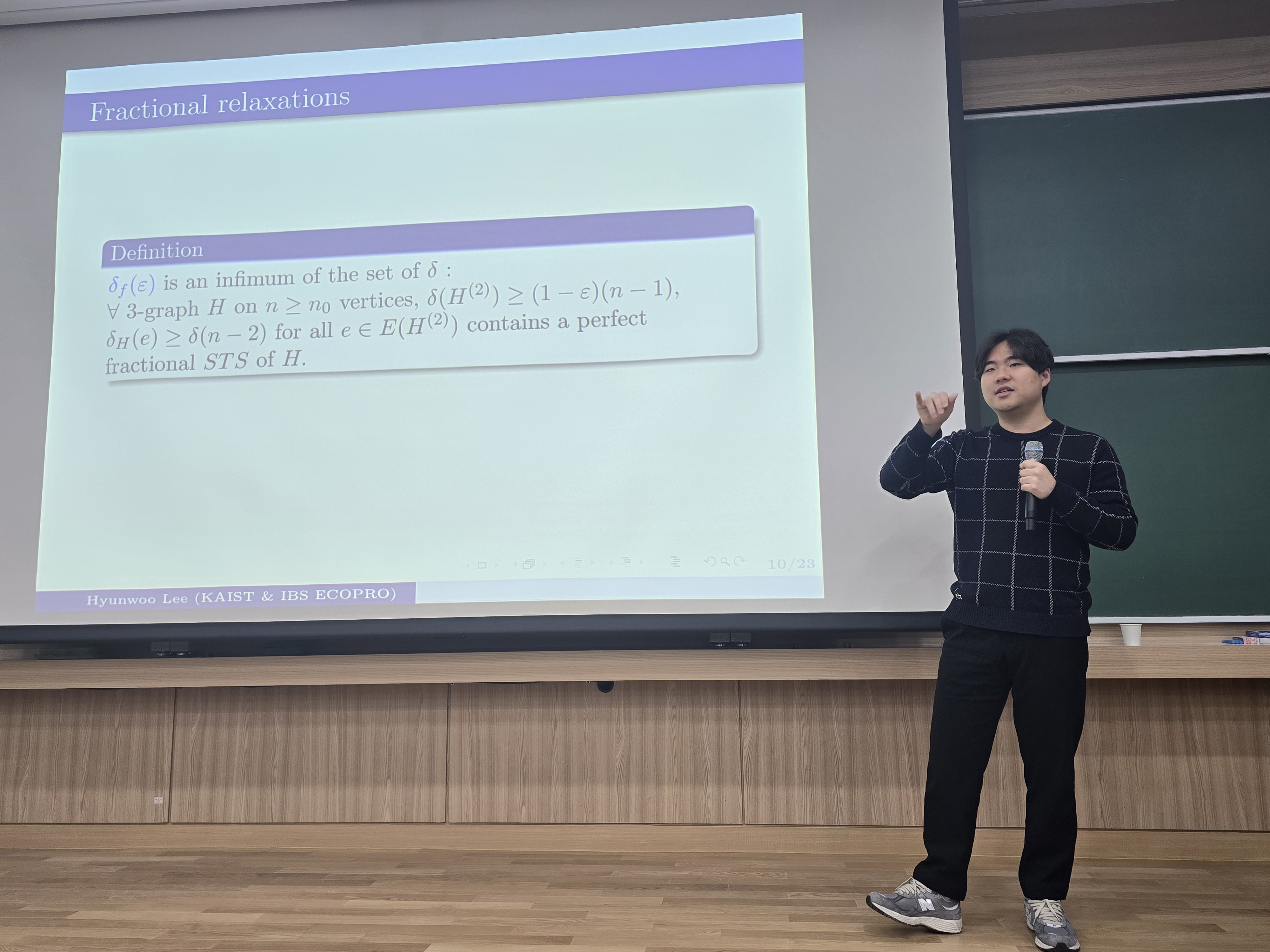

We uploaded photos from the 29th KMGS on March 14, 2024.

Thanks, Hyunwoo Lee and all the participants!

The 29th KMGS will be held on Thursday, March 14, in Room 1501 of the Natural Science Building (E6-1).

Our speaker is Hyunwoo Lee from the Dept. of Mathematical Sciences, KAIST.

The abstract of the talk is as follows.

[Speaker] 이현우 (Hyunwoo Lee) from the Dept. of Mathematical Sciences, KAIST, supervised by Prof. Jaehoon Kim (김재훈)

[Title] Towards a high-dimensional Dirac’s theorem

[Discipline] Combinatorics

[Abstract]

Dirac’s theorem determines the sharp minimum degree threshold for graphs to contain perfect matchings and Hamiltonian cycles. There have been various attempts to generalize this theorem to hypergraphs with larger uniformity by considering hypergraph matchings and Hamiltonian cycles.

In this paper, we consider another natural generalization of perfect matchings: Steiner triple systems. Since a Steiner triple system can be viewed as a partition of the pairs of vertices into triples, it is a natural high-dimensional analogue of a perfect matching in graphs.
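To make the "partition of pairs" view concrete, here is a small sketch (our own illustration, not code from the talk or paper) that checks whether a list of triples on the vertices 0..n-1 covers every pair exactly once. The function names `is_steiner_triple_system` and `is_admissible_order` are hypothetical. The example uses the Fano plane, the Steiner triple system on 7 points. Since each triple covers 3 pairs, such a system has n(n-1)/6 triples, and one exists exactly when n ≡ 1 or 3 (mod 6) (Kirkman's classical theorem).

```python
from itertools import combinations


def is_steiner_triple_system(n, triples):
    """Hypothetical helper: True if every pair of distinct vertices
    in {0, ..., n-1} lies in exactly one of the given triples."""
    pair_count = {pair: 0 for pair in combinations(range(n), 2)}
    for triple in triples:
        # Each block must consist of three distinct vertices in range.
        if len(set(triple)) != 3 or not all(0 <= v < n for v in triple):
            return False
        for pair in combinations(sorted(triple), 2):
            pair_count[pair] += 1
    # A partition of the pairs means every pair is counted exactly once.
    return all(count == 1 for count in pair_count.values())


def is_admissible_order(n):
    """Hypothetical helper: an STS on n vertices exists iff n ≡ 1 or 3 (mod 6)."""
    return n % 6 in (1, 3)


# The Fano plane: a Steiner triple system on 7 vertices (labelled 0..6).
FANO = [(0, 1, 2), (0, 3, 4), (0, 5, 6), (1, 3, 5), (1, 4, 6), (2, 3, 6), (2, 4, 5)]

print(is_steiner_triple_system(7, FANO))       # True
print(len(FANO) == 7 * 6 // 6)                 # True: n(n-1)/6 triples
print(is_steiner_triple_system(7, FANO[:-1]))  # False: some pairs uncovered
```

Dropping any one triple leaves three pairs uncovered, which is why the last check fails.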

We prove that for every sufficiently large integer $n$ with $n \equiv 1 \text{ or } 3 \pmod{6}$, any $n$-vertex $3$-uniform hypergraph $H$ with minimum codegree at least $\left(\frac{3 + \sqrt{57}}{12} + o(1) \right)n = (0.879… + o(1))n$ contains a Steiner triple system. In fact, we prove a stronger statement about transversal Steiner triple systems in a collection of hypergraphs.

We conjecture that the constant $\frac{3 + \sqrt{57}}{12}$ can be replaced with $\frac{3}{4}$, which would give an asymptotically tight high-dimensional generalization of Dirac’s theorem.
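As a quick arithmetic check of the two constants above, the sketch below evaluates the proven constant, its gap to the conjectured 3/4, and the leading-order codegree c·n for one admissible order (n = 999, which is 3 mod 6). The helper `leading_order_codegree` is hypothetical, and it deliberately drops the o(1)·n term, so its values are only the leading-order terms, not guaranteed thresholds for any particular n.

```python
import math

# Constant in the proven codegree condition and the conjectured tight constant.
PROVEN = (3 + math.sqrt(57)) / 12
CONJECTURED = 3 / 4


def leading_order_codegree(n, constant):
    """Hypothetical helper: leading-order codegree constant * n.

    The o(1) * n error term from the theorem is ignored, so this is an
    illustration of scale only, not a threshold for a specific n."""
    return constant * n


print(f"{PROVEN:.4f}")                                      # 0.8792
print(f"{PROVEN - CONJECTURED:.4f}")                        # 0.1292
print(f"{leading_order_codegree(999, PROVEN):.2f}")         # 878.27
print(f"{leading_order_codegree(999, CONJECTURED):.2f}")    # 749.25
```

For n = 999, the conjecture would lower the leading-order requirement by roughly 129 codegree.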

[Language] Korean

Here is the schedule of the KAIST Math Graduate Student Seminar (KMGS) for Spring 2024. We look forward to your participation!

This semester, six talks will be held on Thursdays from 11:50 to 12:40 in Room 1501, on the first floor of the Natural Science Building (E6-1).

Lunch will be provided after each talk.

Please apply through the QR code on the poster.

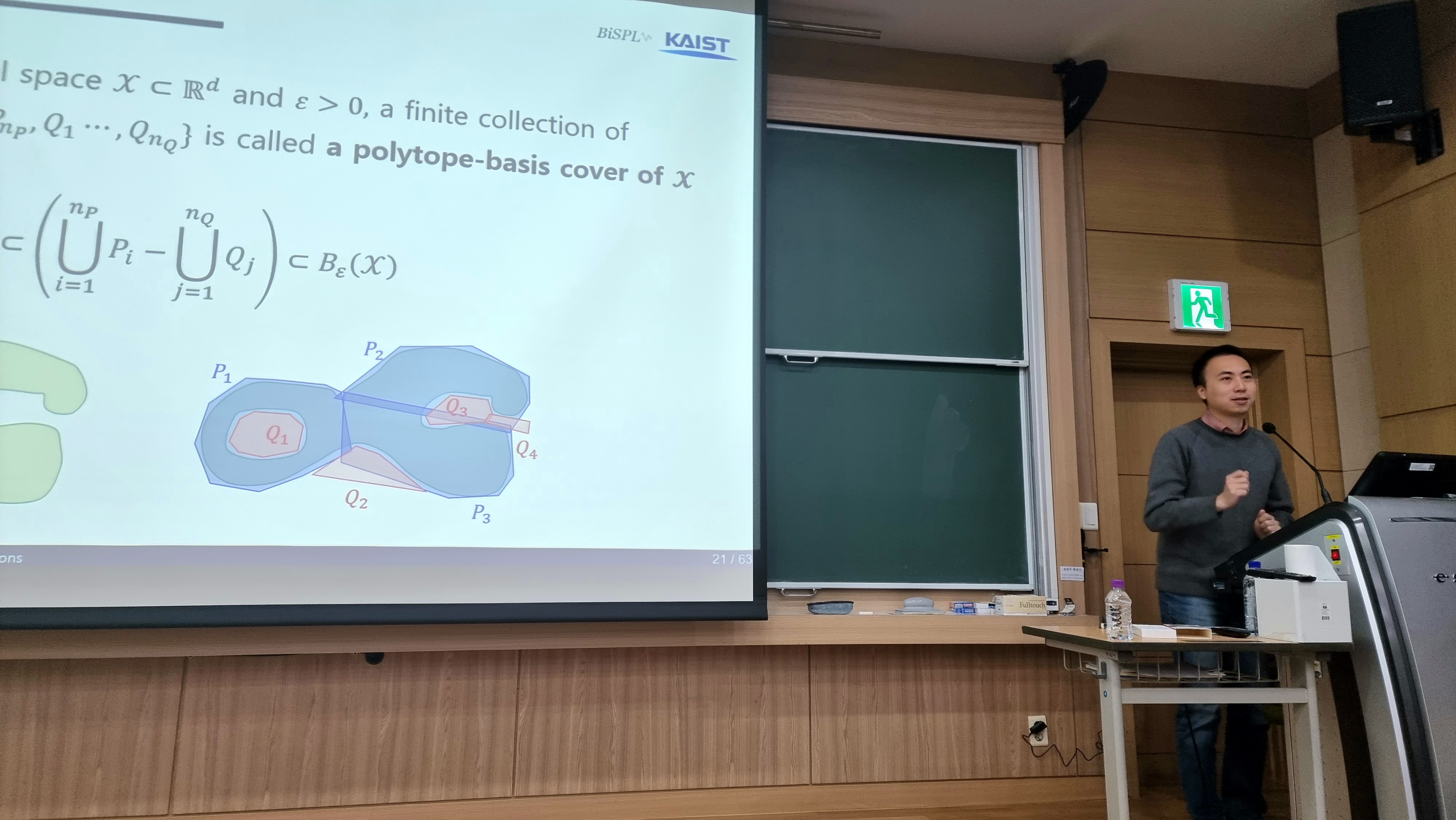

We uploaded photos from the 28th KMGS on November 30, 2023. Thanks, Sangmin Lee and all the participants!

The 28th KMGS will be held on Thursday, November 30, in Room 1501 of the Natural Science Building (E6-1).

Our speaker is Sangmin Lee from the Dept. of Mathematical Sciences, KAIST.

The abstract of the talk is as follows.

Slot (11:50 AM to 12:30 PM)

[Speaker] 이상민 (Sangmin Lee) from the Dept. of Mathematical Sciences, KAIST, supervised by Prof. Jong Chul Ye (예종철)

[Title] Data Topology and Geometry-dependent Bounds on ReLU Network Widths

[Discipline] Machine Learning

[Abstract]

While deep neural networks (DNNs) have been widely used in numerous applications over the past few decades, their underlying theoretical mechanisms are still not fully understood. In this presentation, we propose a geometric and topological approach to understanding how deep ReLU networks work on classification tasks. Specifically, we provide lower and upper bounds on neural network widths based on the geometric and topological features of the given data manifold. We also prove that, regardless of whether the mean squared error (MSE) loss or the binary cross-entropy (BCE) loss is used, the loss landscape has no local minimum.

[Language] Korean (English on request)

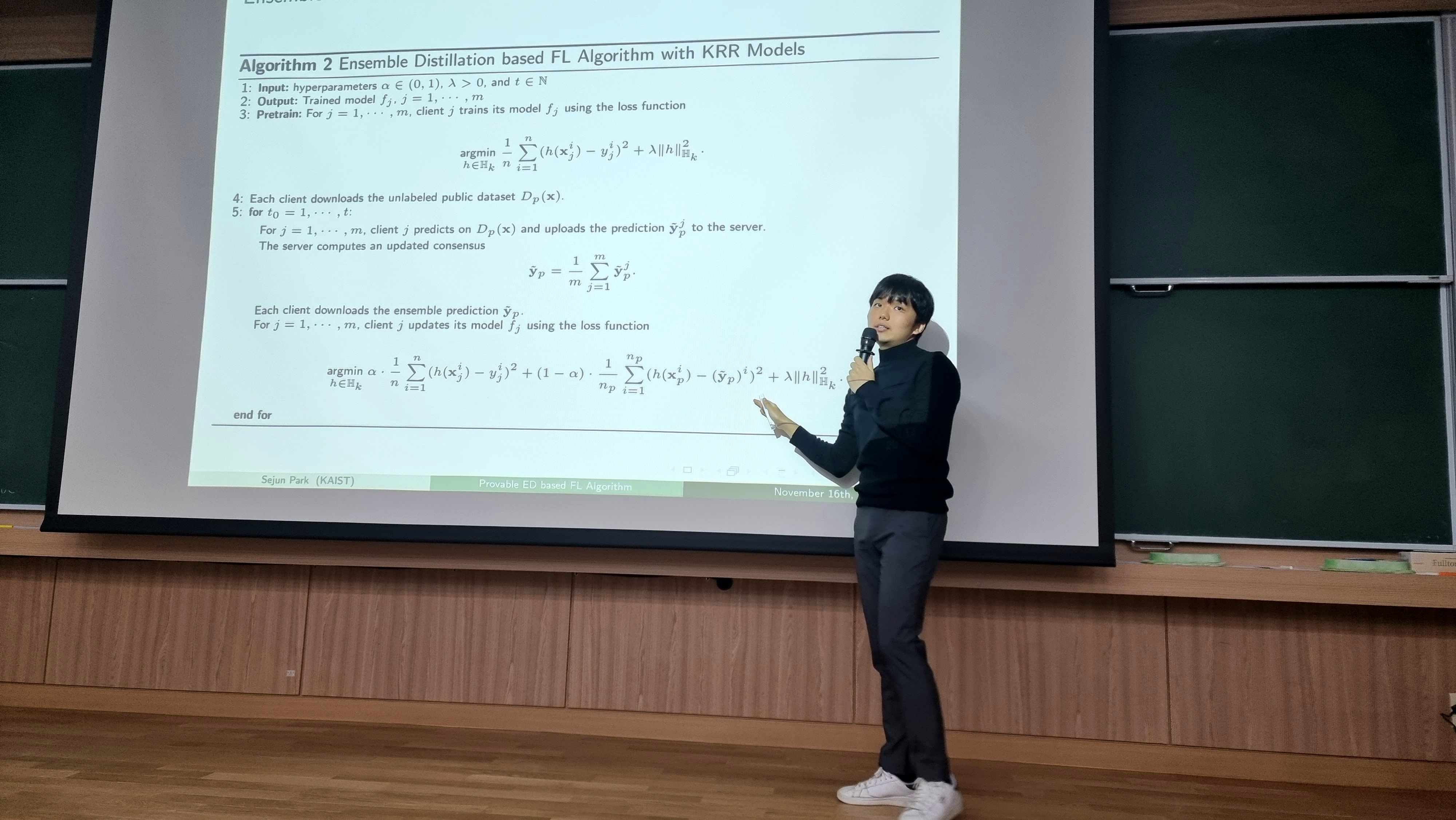

We uploaded photos from the 27th KMGS on November 16, 2023. Thanks, Sejun Park and all the participants!

The 27th KMGS will be held on Thursday, November 16, in Room 1501 of the Natural Science Building (E6-1).

Our speaker is Sejun Park from the Dept. of Mathematical Sciences, KAIST.

The abstract of the talk is as follows.

Slot (11:50 AM to 12:30 PM)

[Speaker] 박세준 (Sejun Park) from the Dept. of Mathematical Sciences, KAIST, supervised by Prof. Ganguk Hwang (황강욱)

[Title] Provable Ensemble Distillation based Federated Learning Algorithm

[Discipline] Machine Learning

[Abstract]

In this talk, we will mainly discuss the theoretical analysis of federated learning algorithms based on knowledge distillation. Before turning to the main topic, we will introduce the basic concepts of federated learning and knowledge distillation. We will then present a nonparametric view of these distillation-based federated learning algorithms and a generalization analysis of them based on the theory of regularized kernel regression methods.

[Language] Korean

We uploaded photos from the 26th KMGS on November 2, 2023. Thanks, Minseong Kwon and all the participants!